by Carsten Frommhold & Dr. Paul Elvers

Introduction

Training machine learning models on a local machine in a notebook is a common task among data scientists. It is the easiest way to get started, experiment and build a first working model. But for most businesses this is not a satisfying option. Today, making the most of your training data usually means scaling to gigabytes or even petabytes of data, which do not easily fit on your local machine. Data is your most valuable asset, and you would not want to use only a small fraction of it for technical reasons. Another problem with training a model on your local machine is putting it to use. A model that can only be used on your laptop is of little value. It should be available to a large group of consumers, either deployed as a REST API or making batch predictions in a large data pipeline. If your model “works on your machine”, how does it get to production?

Another pitfall lies in keeping the code used to train a model consistent with the serialized model itself. By design, a notebook is well suited for exploratory analysis and development via trial and error. For example, you can jump between individual cells, and a top-down execution order is not enforced. This makes it difficult to verify that the code exactly matches the fitted model. Fitting a model in a notebook, persisting it, changing the notebook afterwards and then committing to git is not a desirable workflow and can lead to issues that are hard to trace. In an optimal workflow, models and their associated code are kept consistent with each other, in the sense that it is possible to fit the model again in a new environment.

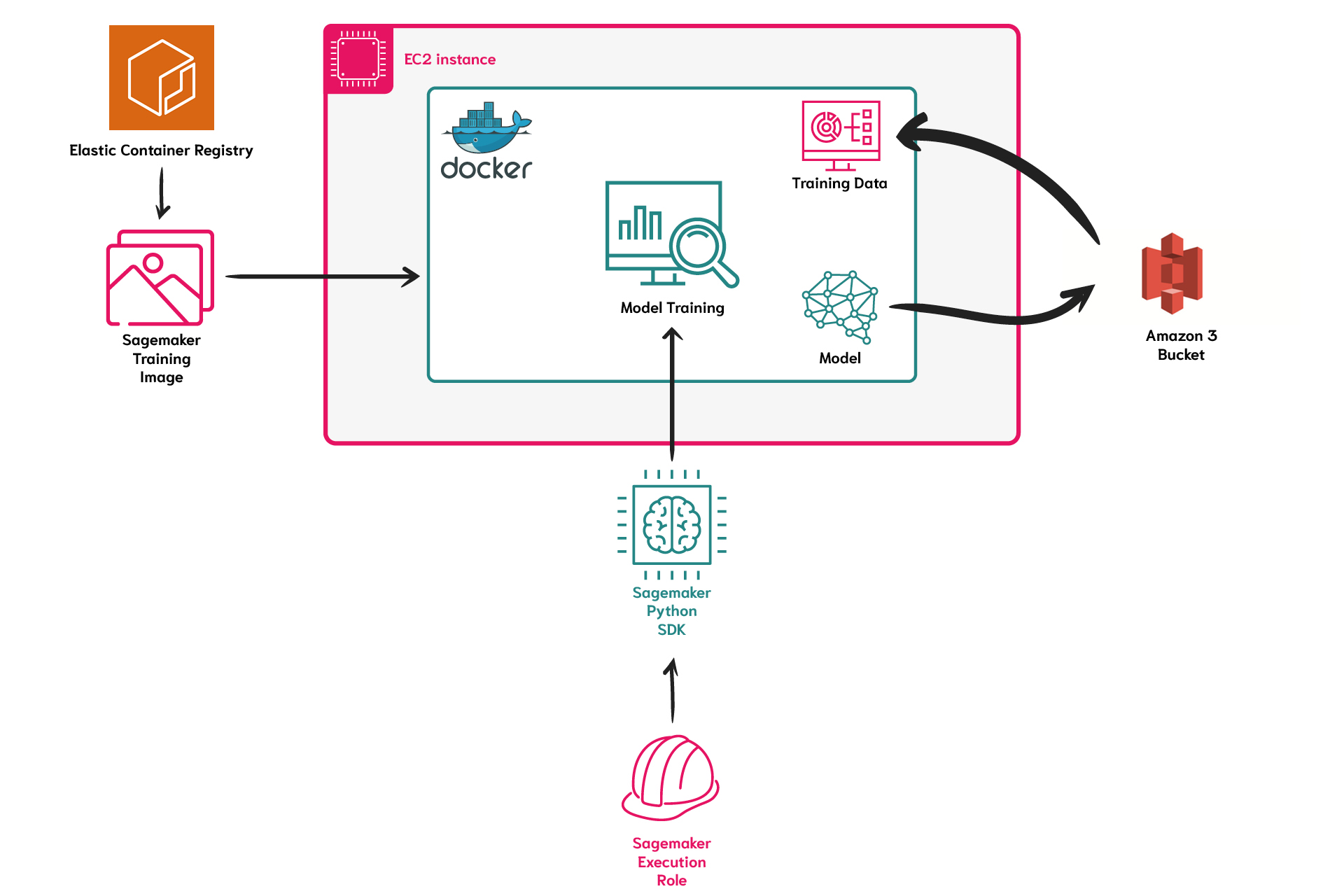

We demonstrate how many of these issues can be circumvented by moving your training routine to the cloud. We use the AWS service Sagemaker to execute a containerized training routine that allows us to a) scale our training job to any size of training data, b) support our routines with single or multiple GPUs if needed, and c) easily deploy our model after training has succeeded. The training and deployment routine is written as a Python script, which can easily be version controlled and allows us to link code and model. The script is passed via the Sagemaker Python SDK to a container running a Docker image with all dependencies needed for the job. The main benefit of using Sagemaker is that you do not have to manage the infrastructure running the container: AWS takes care of it for you. All that is required is a few calls with the Sagemaker Python SDK, and everything else is handled for you.

Schematic illustration of a Sagemaker training job

Prerequisites

Data

In this example we use the Palmer Penguins dataset, which provides a suitable alternative to the frequently used Iris dataset. It contains measurements of individual penguins. You can read more about it here. The task we solve with our machine learning algorithm is predicting the sex of a penguin (male/female) from its attributes (e.g. flipper length, bill length, species, island), which serve as our features.

You can install palmerpenguins via pip.
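The loading step could look like the following minimal sketch. It assumes the palmerpenguins package, whose load_penguins() function returns the data as a pandas DataFrame. The split into categorical and numerical feature lists is our own illustrative choice and not necessarily the one used in the original notebook.

```python
# Illustrative feature lists (an assumption, not necessarily the post's exact choice)
CATEGORICAL_FEATURES = ["species", "island"]
NUMERICAL_FEATURES = ["bill_length_mm", "bill_depth_mm", "flipper_length_mm", "body_mass_g"]
TARGET = "sex"


def load_penguin_data():
    """Return the Palmer Penguins data restricted to the chosen features and the target."""
    # Imported lazily so the rest of the module can be used without the package installed
    from palmerpenguins import load_penguins

    df = load_penguins()  # pandas DataFrame, one row per penguin
    return df[CATEGORICAL_FEATURES + NUMERICAL_FEATURES + [TARGET]]
```

After running pip install palmerpenguins, load_penguin_data() returns only the selected columns. The year column is ignored in this sketch.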

We use the python-dotenv library to load the environment variables needed for executing the job further below. They contain the role used for execution as well as the paths to the data and the model storage.
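Here is a minimal sketch of this step. It assumes the python-dotenv package, which provides load_dotenv(), and a .env file that load_dotenv() can find. The variable names SAGEMAKER_ROLE, S3_DATA_PATH and S3_MODEL_PATH and the JobConfig class are hypothetical placeholders, since the original variable names are not shown here.

```python
import os
from dataclasses import dataclass

# Hypothetical variable names; adapt them to the keys in your own .env file
ENV_KEYS = ("SAGEMAKER_ROLE", "S3_DATA_PATH", "S3_MODEL_PATH")


@dataclass(frozen=True)
class JobConfig:
    role: str        # IAM role ARN used to run the Sagemaker job
    data_path: str   # S3 location of the processed data
    model_path: str  # S3 location for the model artifacts


def load_job_config(use_dotenv: bool = True) -> JobConfig:
    """Read the job configuration from environment variables, optionally via a .env file."""
    if use_dotenv:
        from dotenv import load_dotenv  # provided by the python-dotenv package

        load_dotenv()  # copies entries of a .env file into os.environ (existing values win)
    missing = [key for key in ENV_KEYS if not os.environ.get(key)]
    if missing:
        raise KeyError(f"Missing environment variables: {', '.join(missing)}")
    return JobConfig(
        role=os.environ["SAGEMAKER_ROLE"],
        data_path=os.environ["S3_DATA_PATH"],
        model_path=os.environ["S3_MODEL_PATH"],
    )
```

Failing early with a clear list of missing variables is friendlier than a cryptic error later, when the training job is submitted.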

As an alternative, you can define the desired input and output paths directly and retrieve the Sagemaker execution role.
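Without dotenv, the configuration can be assembled in code. In the sketch below, s3_paths() and the bucket/prefix layout are hypothetical. get_execution_role() from the Sagemaker Python SDK returns the role attached to the notebook environment. It typically only works when running inside Sagemaker; elsewhere, pass the role ARN explicitly.

```python
def s3_paths(bucket: str, prefix: str = "penguins") -> dict:
    """Build hypothetical S3 locations for the processed data and the model artifacts."""
    base = f"s3://{bucket}/{prefix.strip('/')}"
    return {"data_path": f"{base}/data", "model_path": f"{base}/models"}


def execution_role() -> str:
    """Return the IAM role of the current Sagemaker notebook environment."""
    from sagemaker import get_execution_role  # requires the sagemaker package

    return get_execution_role()
```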

Minimal data processing is required: we drop entries with null values as well as duplicates. Then we perform a train-test split and save the data in our working directory as well as in AWS S3 storage.
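The processing step could be sketched as follows. The 80/20 split, the fixed random_state, stratification on the target and the local directory name are our assumptions. Session.upload_data() from the Sagemaker Python SDK copies a local file or directory to S3 and returns its S3 URI; the bucket and key prefix passed to it are placeholders.

```python
from pathlib import Path

import pandas as pd
from sklearn.model_selection import train_test_split

TARGET = "sex"


def prepare_splits(df: pd.DataFrame, test_size: float = 0.2, random_state: int = 42):
    """Drop incomplete and duplicate rows, then split into train and test sets."""
    clean = df.dropna().drop_duplicates()
    X = clean.drop(columns=[TARGET])
    y = clean[TARGET]
    # Stratifying keeps the male/female ratio similar in both splits
    return train_test_split(X, y, test_size=test_size, random_state=random_state, stratify=y)


def save_splits(X_train, X_test, y_train, y_test, out_dir="data") -> Path:
    """Write the four splits as CSV files (the training script reads X_train/y_train)."""
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    X_train.to_csv(out / "X_train.csv", index=False)
    X_test.to_csv(out / "X_test.csv", index=False)
    y_train.to_csv(out / "y_train.csv", index=False)
    y_test.to_csv(out / "y_test.csv", index=False)
    return out


def upload_splits(local_dir, bucket: str, key_prefix: str) -> str:
    """Upload the local directory to S3 and return the resulting S3 URI."""
    from sagemaker import Session  # requires the sagemaker package

    return Session().upload_data(path=str(local_dir), bucket=bucket, key_prefix=key_prefix)
```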

Model training

To execute the training and deployment routine, we need to write a Python script. The crucial part for training lies in the main clause. It reads the data, instantiates a pipeline and trains the model. Here, minimal preprocessing consisting of one-hot encoding and standard scaling is chosen, and LogisticRegression acts as a baseline model. The model is then serialized and saved to the model directory.
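The core of such a training routine might look like the sketch below. A ColumnTransformer applies one-hot encoding to the categorical columns and standard scaling to the numerical ones, followed by LogisticRegression, and the fitted pipeline is serialized with joblib. The file name model.joblib, handle_unknown="ignore" and max_iter=1000 are our assumptions.

```python
from pathlib import Path

import joblib
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import OneHotEncoder, StandardScaler


def build_pipeline(categorical, numerical) -> Pipeline:
    """One-hot encode categorical, scale numerical columns, then fit a logistic regression."""
    preprocess = ColumnTransformer([
        ("cat", OneHotEncoder(handle_unknown="ignore"), categorical),
        ("num", StandardScaler(), numerical),
    ])
    return Pipeline([("preprocess", preprocess), ("clf", LogisticRegression(max_iter=1000))])


def train(train_dir, model_dir, categorical, numerical) -> Pipeline:
    """Fit the pipeline on X_train.csv / y_train.csv and save it to model_dir."""
    X = pd.read_csv(Path(train_dir) / "X_train.csv")
    y = pd.read_csv(Path(train_dir) / "y_train.csv").squeeze("columns")  # one-column frame -> Series
    model = build_pipeline(categorical, numerical).fit(X, y)
    Path(model_dir).mkdir(parents=True, exist_ok=True)
    joblib.dump(model, Path(model_dir) / "model.joblib")
    return model
```

Wrapping preprocessing and model in one Pipeline means the serialized artifact contains everything needed to turn raw feature columns into predictions. As a result, the serving code does not have to repeat the preprocessing.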

The script takes four arguments. The first is the input path for the training data; the script assumes that this directory contains two files, X_train.csv and y_train.csv. The second and third arguments list the categorical and the numerical features, which are specified explicitly in this example. Finally, the fourth argument defines the output path for the serialized model. Using these arguments makes it convenient to run the training routine both on our local machine and via Sagemaker in the cloud.

The script also contains several serving functions that Sagemaker uses to serve the model via a Sagemaker model endpoint. These are model_fn(), which loads the model from file; input_fn(), which converts the request into a format that can be passed to the model's predict() function; predict_fn(), which calls predict() on the model; and output_fn(), which converts the model output into a format that can be sent back to the caller. For scikit-learn models, model_fn() must always be provided, while the other three have default implementations that can be overridden.
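Here is a minimal sketch of the four serving functions. It assumes JSON requests containing a list of records (one object per penguin) and a model saved as model.joblib. The function names and argument order follow the Sagemaker scikit-learn serving convention. Restricting the content types to JSON is our simplification. The exact return value that output_fn() must produce may differ between container versions, so check the documentation for the version you use.

```python
import json
from pathlib import Path

import joblib
import pandas as pd


def model_fn(model_dir):
    """Load the pipeline saved during training (file name model.joblib is assumed)."""
    return joblib.load(Path(model_dir) / "model.joblib")


def input_fn(request_body, request_content_type):
    """Turn a JSON list of records into a DataFrame with the feature columns."""
    if request_content_type != "application/json":
        raise ValueError(f"Unsupported content type: {request_content_type}")
    if isinstance(request_body, (bytes, bytearray)):
        request_body = request_body.decode("utf-8")
    return pd.DataFrame(json.loads(request_body))


def predict_fn(input_data, model):
    """Run the fitted pipeline on the deserialized input."""
    return model.predict(input_data)


def output_fn(prediction, accept):
    """Serialize the predicted labels as a JSON list, e.g. ["male", "female"]."""
    if accept not in ("application/json", "*/*"):
        raise ValueError(f"Unsupported accept type: {accept}")
    return json.dumps(prediction.tolist())
```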

For testing purposes, the script is also callable on our local machine, or on the instance on which the notebook is running.
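Running the script locally could be as simple as invoking it with the same arguments Sagemaker would pass. In this sketch, the script name train.py and the flag names are hypothetical.

```python
import subprocess
import sys


def local_train_command(script, train_dir, model_dir, categorical, numerical) -> list:
    """Build a command line that mirrors the arguments passed in the cloud."""
    return [
        sys.executable, script,  # use the current Python interpreter
        "--train", train_dir,
        "--categorical-features", ",".join(categorical),
        "--numerical-features", ",".join(numerical),
        "--model-dir", model_dir,
    ]


def run_locally(script, train_dir, model_dir, categorical, numerical) -> None:
    """Run the training script in a subprocess; raises CalledProcessError on failure."""
    subprocess.run(local_train_command(script, train_dir, model_dir, categorical, numerical), check=True)
```

For example, run_locally("train.py", "data", "model", ["species", "island"], ["bill_length_mm"]) would train on the local CSV files and write the model to ./model.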

In order to run the training routine in the cloud, we use the SKLearn object from the Sagemaker Python SDK. It is the standard interface for defining and scheduling training and deployment of scikit-learn models. We specify the resources needed, the framework version, the entry point and the role, as well as the output_path. The output_path is the S3 location to which the contents of the model directory are uploaded once training has finished. Further arguments, such as the lists of numerical and categorical features, can be passed via the hyperparameters dictionary.
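Defining the estimator might look like this sketch. The entry point train.py, the instance type ml.m5.large and the framework version 1.2-1 are example values; check which scikit-learn versions are available to you. The hyperparameter keys are hypothetical and are forwarded to the script as command-line arguments. The sagemaker import sits inside the function so the helper can be defined without the SDK installed.

```python
def make_hyperparameters(categorical, numerical) -> dict:
    """Encode the feature lists as comma-separated strings (an assumed convention)."""
    return {
        "categorical-features": ",".join(categorical),
        "numerical-features": ",".join(numerical),
    }


def make_estimator(role, output_path, hyperparameters, instance_type="ml.m5.large"):
    """Define, but do not start, a Sagemaker training job for the training script."""
    from sagemaker.sklearn.estimator import SKLearn  # requires the sagemaker package

    return SKLearn(
        entry_point="train.py",           # hypothetical script name
        framework_version="1.2-1",        # example scikit-learn container version
        py_version="py3",
        instance_type=instance_type,      # scale up by choosing a larger instance
        instance_count=1,
        role=role,
        output_path=output_path,          # S3 prefix that receives model.tar.gz
        hyperparameters=hyperparameters,  # passed to the script as --key value
    )
```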

When calling fit(), Sagemaker automatically launches a container with a scikit-learn image and executes the training script. The dictionary with the single key "train" that we pass to fit() specifies the S3 path of the processed data; the training data is copied from there into the training container. After training, Sagemaker uploads the model artifacts to the S3 location defined via the output_path argument of the SKLearn object.
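The fit() call itself is a one-liner. The sketch below wraps it in a hypothetical helper that validates the S3 URI and returns estimator.model_data, the S3 location of the packaged model artifact (model.tar.gz) once training has finished. Inside the container, the "train" channel is made available under /opt/ml/input/data/train and exposed via SM_CHANNEL_TRAIN.

```python
def launch_training(estimator, train_s3_uri: str, wait: bool = True):
    """Start the training job with a single "train" input channel."""
    if not train_s3_uri.startswith("s3://"):
        raise ValueError("Expected an S3 URI such as s3://bucket/prefix")
    # Blocks until the job has finished when wait=True
    estimator.fit({"train": train_s3_uri}, wait=wait)
    # model_data is only meaningful once the job has completed
    return estimator.model_data if wait else None
```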

Instantiating the SKLearn object and calling its fit() method is all that is needed to launch the training routine as a containerized workflow. We could easily scale the resources, for example by choosing a bigger instance or by using GPU support for our training. We could also make these calls automatically using a workflow orchestration tool such as Airflow or AWS Step Functions, or let a CI/CD pipeline trigger training each time changes to our training routine are merged.
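When training is triggered from CI/CD, one option for tying each training job to the code that produced it is to derive the job name from the current git commit. This sketch uses hypothetical helper names. It assumes git is available and follows Sagemaker's job-name rules: letters, digits and hyphens, at most 63 characters. The resulting name can be passed as estimator.fit(..., job_name=...).

```python
import re
import subprocess
from datetime import datetime, timezone


def current_commit(short: bool = True) -> str:
    """Return the hash of the checked-out git commit."""
    cmd = ["git", "rev-parse", "--short", "HEAD"] if short else ["git", "rev-parse", "HEAD"]
    return subprocess.run(cmd, check=True, capture_output=True, text=True).stdout.strip()


def job_name_for_commit(prefix: str, commit: str, now=None) -> str:
    """Build a job name like 'penguins-abc1234-20230102-030405'."""
    now = now or datetime.now(timezone.utc)
    raw = f"{prefix}-{commit}-{now:%Y%m%d-%H%M%S}"
    # Replace characters Sagemaker does not allow, then enforce the length limit
    name = re.sub(r"[^a-zA-Z0-9-]", "-", raw).strip("-")
    return name[:63].rstrip("-")
```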

Model deployment

After evaluating our model, we can now deploy it. To do so, we only have to call deploy() on the SKLearn object that we used for model training, which boots a model endpoint in the background.
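Here is a sketch of the deployment call. It assumes that the endpoint's serving code accepts and returns JSON, hence the JSONSerializer and JSONDeserializer from the Sagemaker Python SDK. The instance type is an example value. The serializer and deserializer can be injected, which is mainly useful for testing; deploy_endpoint() itself is a hypothetical helper.

```python
def deploy_endpoint(estimator, instance_type="ml.m5.large", serializer=None, deserializer=None):
    """Deploy the trained model behind a real-time endpoint that exchanges JSON."""
    if serializer is None or deserializer is None:
        from sagemaker.deserializers import JSONDeserializer  # requires the sagemaker package
        from sagemaker.serializers import JSONSerializer

        serializer = serializer or JSONSerializer()
        deserializer = deserializer or JSONDeserializer()
    return estimator.deploy(
        initial_instance_count=1,
        instance_type=instance_type,
        serializer=serializer,      # encodes request payloads as application/json
        deserializer=deserializer,  # decodes JSON responses into Python objects
    )
```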

We can test the endpoint by passing the first 10 rows of our test dataset to it. We receive predictions of whether each penguin is male or female based on its attributes.
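Querying the endpoint with the first ten test rows could look like this sketch. It assumes a predictor that sends JSON (for example one created with a JSONSerializer) and returns one label per record. predict_head() and the column name predicted_sex are hypothetical.

```python
import pandas as pd


def predict_head(predictor, X_test: pd.DataFrame, n: int = 10) -> pd.DataFrame:
    """Send the first n test rows to the endpoint and attach the predicted sex."""
    sample = X_test.head(n)
    # A list of {column: value} records, which a JSON serializer can encode
    predictions = predictor.predict(sample.to_dict(orient="records"))
    return sample.assign(predicted_sex=predictions)
```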

At the end of our journey, the endpoint should be shut down, since a running endpoint keeps incurring costs.
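To make sure the endpoint is shut down even if something fails in between, deployment and cleanup can be paired in a context manager. This sketch builds on the SDK's deploy() and Predictor.delete_endpoint() methods; temporary_endpoint() is a hypothetical helper.

```python
from contextlib import contextmanager


@contextmanager
def temporary_endpoint(estimator, **deploy_kwargs):
    """Deploy an endpoint for the duration of a with-block, then delete it."""
    predictor = estimator.deploy(**deploy_kwargs)
    try:
        yield predictor
    finally:
        # Runs even if the body raises, so no endpoint is left running by accident
        predictor.delete_endpoint()
```

Usage: with temporary_endpoint(estimator, initial_instance_count=1, instance_type="ml.m5.large") as predictor: followed by the prediction calls.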

Outlook

We demonstrated how a machine learning training routine can be moved to cloud-based execution using a dedicated container in AWS. Compared to a local workflow, this provides numerous advantages, such as scalability of compute resources (one simply has to change parameters on the SKLearn object) and reproducibility of results. Training scripts can easily be versioned and training jobs triggered automatically, which links model training to version control. Most importantly, it lowers the barrier between development and production: deploying a trained model only requires a single method call. You can find the resulting notebook on GitHub.

Of course, the example provided here does not solve all technical hurdles of MLOps, and more features are usually needed to build a mature ML platform. Ideally, we would want a model registry to store and manage models and artifacts, an experiment tracking platform to log all our efforts to improve the model, and perhaps also a data versioning tool. But moving your local training routine into the cloud is already a big step forward!

There are also many possibilities to extend the training routine and adapt it to specific needs. If special Python libraries or dependencies are used for the algorithm, a custom Docker image can be pushed to AWS Elastic Container Registry (ECR) and then used in the Sagemaker training routine. If data processing becomes more complex, one can use a processing container to encapsulate this step. Also, if the endpoint is used in production, it is advisable to develop a REST API on top of the deployed Sagemaker endpoint, which makes it easier to handle security constraints as well as business logic and preprocessing of your API calls. And of course, a model evaluation step that calculates metrics on the validation dataset should be included, but we will dive deeper into model evaluation in one of our next blog posts.
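As a starting point for such an evaluation step, here is a minimal sketch that computes accuracy and F1 score on held-out data with scikit-learn. The choice of female as the positive class for the F1 score is arbitrary and only an assumption for illustration.

```python
from sklearn.metrics import accuracy_score, f1_score


def evaluate(model, X_val, y_val, positive_label: str = "female") -> dict:
    """Compute simple classification metrics for a fitted model on validation data."""
    predictions = model.predict(X_val)
    return {
        "accuracy": accuracy_score(y_val, predictions),
        # F1 for the chosen positive class (binary averaging)
        "f1": f1_score(y_val, predictions, pos_label=positive_label),
    }
```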

Stay tuned!